After several years using the Blogger platform for the Bits, Bytes, and Gates blog, I've decided to move the blog to a static web platform. You can find future (and previous) posts here: https://bitsbytesgates.com. See you over there!

Bits, Bytes, and Gates

Matthew Ballance's blog -- Musings on hardware and embedded software design and verification, and the EDA tools and methodologies that support them.

Saturday, February 18, 2023

Sunday, August 21, 2022

Simplifying Custom Template-Generated Content

As a verification engineer, it's quite common to work with data and code that follow a regular pattern. Having an efficient way to create this repetitive code is a significant productivity boost. While there certainly are places in the code where 'your critical generation or checking algorithm' goes, much of the structure of an agent, a test environment, etc. remains the same. The same goes for other parts of the flow, such as project meta-data, test lists, etc. There are two things that keep us from just making copies of a set of 'golden' files to create the basis for a new UVM agent, project, etc.: some or all of the files need to have some data substituted or changed. For example, we want to substitute the name of the new UVM agent we're creating into most of the new SystemVerilog source code.

Custom code generators have been developed for some of these tasks. These often focus on providing a domain-specific way to capture input data, such as the structure of a UVM testbench or the layout of registers in a design. But there are many more opportunities to generate template-driven code that cannot justify the investment to create a focused solution.

A few years ago, I created the Verification Template Engine (VTE) to serve my needs for generating template-driven content. I developed VTE with three user-experience requirements in mind:

- Creating a new template should be very easy, while still providing access to powerful generation features.

- Managing the available templates should be simple for a user.

- The core tools should be generic, and make few or no assumptions about what is being generated.

Conclusion

As the tutorial above illustrates, creating a new template for use with VTE is no more effort than making a few name substitutions. If you use the template more than once, you will already have received a positive return on the effort invested. While templates can be simple, you have the full power of the Jinja2 template engine when you need to do something more complex. I encourage you to check out the VTE documentation and look for opportunities where using template-driven content generation can make your life easier and make you more productive.

The views and opinions expressed above are solely those of the author and do not represent those of my employer or any other party.

Sunday, July 17, 2022

PyUCIS: Manipulating Coverage Data

In a prior post, we looked at how to inspect coverage as a text report and export coverage data using the PyVSC API, and view coverage graphically using the PyUCIS-Viewer. Recent enhancements have enabled the PyUCIS library to provide even more ways to manipulate coverage data. Over the next couple of posts, we’ll look at those enhancements.

New ‘ucis’ Command

PyUCIS is a library for working with the Accellera UCIS data model. It started as a library for other applications and libraries, such as PyVSC and the PyUCIS Viewer, to use to read and write data using the UCIS data model. Recent enhancements have added standalone functionality which can meaningfully be accessed from the command line.

You can find documentation for the ucis command and sub-commands in the PyUCIS documentation. Fundamentally, there are four key operations:

- Convert coverage data from one format to another

- Merge coverage data from multiple databases into a single database

- Produce coverage reports in various formats

- Obtain information about available coverage data and report formats

These commands are just a starting point. They will be enhanced over time, and more commands may be added as well. If you have suggestions for new commands and/or new capabilities for existing commands, feel free to add an enhancement request on the PyUCIS GitHub page.

Plug-in Framework

PyUCIS has added a plug-in framework with support for database formats and report formats. The goal is to make commands operating on coverage data extensible from the beginning, as well as to enable the set of supported coverage-data formats and report formats to be easily extended without changing PyUCIS. I’ll devote a future post to the plug-in framework. For now, the ucis command supports listing the available coverage-data and report plug-ins. For example:

% ucis list-db-formats

libucis - Reads coverage data via an implementation of the UCIS C API

xml - Supports reading and writing UCIS XML interchange

yaml - Reads coverage data from a YAML file

New Input Format

One often-requested PyUCIS feature is the ability to merge coverage data from several input coverage databases into a single resulting coverage database. One of the first challenges I faced in implementing this functionality was how to write tests. The UCIS API is written with applications in mind, and I’ve found it to be a pretty verbose API when it comes to writing tests. Consequently, tests written directly in terms of the API aren’t particularly easy to follow.

I decided to define a YAML format to make it simpler to capture coverage data in an easy-to-read way. Initially, this was just for testing. However, it may also be a useful interchange format that is less verbose and complex (also, quite possibly, more simplistic) than the XML interchange format defined by the UCIS standard.

Merging Coverage Data

One consistently-requested feature for PyUCIS is the ability to merge multiple databases into a single unified coverage database. PyUCIS now supports basic merge functionality. Currently, PyUCIS performs a union merge where all unique coverage features found in all the input databases are propagated to the output database. I anticipate that more merge algorithms will need to be added over time, but hopefully this is a good start.

Let’s take a look at a very simple case. Let’s say we have two coverage-data sets shown below:

The structure of these two coverage databases is the same (same covergroup type, instance, and coverpoint). Each coverage database has 50% coverage. Let’s merge these two databases and report the coverage.

% ucis merge -if yaml -o merge.xml coverage_1.ycdb coverage_2.ycdb

We specify the two input databases, as well as their format (yaml). We specify the output database as merge.xml.

The resulting coverage report on the merged database will report 100% coverage, as expected:

% ucis report merge.xml

TYPE i1 : 100.000000%

CVP cp1 : 100.000000%
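To make the union-merge idea concrete, here is a minimal sketch in plain Python. It does not use the PyUCIS API: the nested-dictionary layout, the bin names, and the `union_merge` and `coverpoint_coverage` helpers are all assumptions made up for illustration. It only shows why two databases at 50% can merge to 100% when they hit different bins.

```python
from typing import Dict

# Hypothetical in-memory stand-in for a coverage database (not a PyUCIS type):
# {covergroup type: {coverpoint: {bin name: hit count}}}
CoverageDb = Dict[str, Dict[str, Dict[str, int]]]


def union_merge(*dbs: CoverageDb) -> CoverageDb:
    """Propagate every unique type/coverpoint/bin to the result, summing hit counts."""
    merged: CoverageDb = {}
    for db in dbs:
        for cg_name, coverpoints in db.items():
            cg = merged.setdefault(cg_name, {})
            for cp_name, bins in coverpoints.items():
                cp = cg.setdefault(cp_name, {})
                for bin_name, hits in bins.items():
                    cp[bin_name] = cp.get(bin_name, 0) + hits
    return merged


def coverpoint_coverage(bins: Dict[str, int]) -> float:
    """Percentage of bins that have at least one hit."""
    if not bins:
        return 0.0
    return 100.0 * sum(1 for hits in bins.values() if hits > 0) / len(bins)


# Two made-up databases with the same structure, each hitting a different bin.
db1: CoverageDb = {"i1": {"cp1": {"b0": 1, "b1": 0}}}
db2: CoverageDb = {"i1": {"cp1": {"b0": 0, "b1": 1}}}
merged = union_merge(db1, db2)

print("db1:    %f%%" % coverpoint_coverage(db1["i1"]["cp1"]))
print("merged: %f%%" % coverpoint_coverage(merged["i1"]["cp1"]))
```

Summing hit counts is one possible choice for combining bins that appear in several inputs; the key property of a union merge is simply that no unique coverage feature is dropped.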

Reporting Coverage Data

Reporting is a key activity when working with coverage data. We’ve looked at the ability to browse coverage data graphically using the PyUCIS-Viewer, but getting a textual report is every bit as important. In addition to presenting information concisely, textual reports can be processed programmatically to extract key pieces of data.

We can list the currently-available report plugins using the ucis command:

% ucis list-rpt-formats

json - Produces a machine-readable JSON coverage report

txt - Produces a human-readable textual coverage report

The default report is textual. Let’s create a textual report on the YAML coverage-data above:

% ucis report -if yaml coverage.ycdb

Note that we need to specify the format of the input data (yaml). The result is a simple human-readable report of the coverage data in the database.

What if we wanted to post-process the data using a script? We certainly could extract what we need by parsing the output above, but working with data in a machine-readable format is often much simpler. Let’s report our data in JSON format:

% ucis report -if yaml -of json coverage.ycdb
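As a rough illustration of why a machine-readable report is easier to post-process, the sketch below walks an arbitrary JSON document and collects every numeric field named `coverage`. The layout of the JSON produced by PyUCIS is not shown in this post, so the field name `coverage` and the sample document are assumptions; check the real report before relying on either.

```python
import json
from typing import Any, Iterator, Tuple


def find_coverage_values(node: Any, path: str = "") -> Iterator[Tuple[str, float]]:
    """Yield (path, value) for every numeric field named 'coverage' in nested JSON data."""
    if isinstance(node, dict):
        for key, value in node.items():
            child = f"{path}/{key}"
            is_number = isinstance(value, (int, float)) and not isinstance(value, bool)
            if key == "coverage" and is_number:
                yield child, float(value)
            else:
                yield from find_coverage_values(value, child)
    elif isinstance(node, list):
        for index, item in enumerate(node):
            yield from find_coverage_values(item, f"{path}[{index}]")


# Made-up sample standing in for the text of a JSON coverage report.
sample_report = json.loads("""
{"covergroups": [{"name": "i1", "coverage": 50.0,
                  "coverpoints": [{"name": "cp1", "coverage": 50.0}]}]}
""")

for location, value in find_coverage_values(sample_report):
    print(f"{location}: {value:.1f}%")
```

Because the walker makes no assumption about nesting, it keeps working if the report gains extra levels, at the cost of not knowing what each level means.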

Next Steps

PyUCIS continues to evolve, adding a few more hopefully-useful features at a time. Stay tuned for a future post on the plug-in interface, and the addition of more coverage-database and report formats.


Sunday, June 26, 2022

Tools and Techniques to Improve YAML-File Usability

This blog post is a bit of a departure from many that I’ve created for this blog. Most of my blog posts are about things I’ve created. This post is about a collection of tools that I use in developing the things I create.

I’ve recently come back to working on some new features in PyUCIS, the Python library for accessing functional coverage data. PyUCIS provides an implementation of the Accellera UCIS, and several back-end implementations. Good tests are critical when developing new functionality and, in the case of PyUCIS, tests rely on having coverage data to manipulate. As it so happens, while the UCIS API is good for providing tools access to coverage data, it’s not a great interface for humans (and, specifically, for test writers). What test writers need is a very concise and easy-to-read mechanism to capture the coverage data on which the library should operate. How should we capture this data? A couple decades ago, I might have toyed with developing a small language grammar to capture exactly the data I needed. Today, using a mark-up language like YAML or JSON to capture such data is my go-to approach.

YAML - A Data Format for Everything and Nothing

There are many reasons for the popularity of YAML for capturing application-configuration information, such as what we need to capture coverage data. YAML’s structure of a nested series of mappings and lists lends itself to easily capturing all manner of data. Furthermore, support for reading and writing YAML is available in the vast majority of programming languages.

However, the ease with which we can define new data formats, and create simple processors to accept data captured in these formats, can be deceptive. It’s tempting to think that, because YAML defines a standard set of structures for capturing data, users will find it easy and intuitive to capture data in our specific format. It’s tempting to think that our format might be so simple that a little documentation with a few examples will be more than sufficient. The truth, however, is that making our application-specific data format usable requires us to do many of the same things that we would have to do if we defined a custom language. Our YAML-based format must be fully documented, and our data processors must be robust: accepting valid content, rejecting invalid content, and never silently ignoring unrecognized input. I’ve had the painful experience of coming back to a project (yep, one that I created) after a few months away and having to dig into the YAML-processing code to remember the data format.
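Here is a minimal sketch of what "not silently ignoring unrecognized input" can look like for hand-written processing code. The key names (`name`, `weight`, `instances`) and the `check_covergroup` helper are hypothetical and chosen only for illustration; the entry is assumed to be a mapping as returned by a YAML parser.

```python
from typing import Any, Dict

# Hypothetical keys for one covergroup entry: 'name' is required, the rest optional.
REQUIRED_KEYS = {"name"}
OPTIONAL_KEYS = {"weight", "instances"}


def check_covergroup(entry: Dict[str, Any]) -> None:
    """Raise ValueError for missing required keys or for unrecognized keys."""
    if not isinstance(entry, dict):
        raise ValueError(f"expected a mapping, got {type(entry).__name__}")
    keys = set(entry)
    missing = REQUIRED_KEYS - keys
    if missing:
        raise ValueError(f"missing required key(s): {sorted(missing)}")
    unknown = keys - REQUIRED_KEYS - OPTIONAL_KEYS
    if unknown:
        raise ValueError(f"unrecognized key(s): {sorted(unknown)}")


check_covergroup({"name": "cg1", "weight": 1})      # accepted

try:
    check_covergroup({"name": "cg1", "wieght": 1})  # misspelled optional key
except ValueError as err:
    print("rejected:", err)
```

Without the unknown-key check, the misspelled `wieght` entry would be accepted and the default weight would quietly be used instead.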

The apparent ease with which we can access data from our application code is also a bit deceptive. Most YAML-reading libraries provide access to the data through a hierarchy of maps and lists that mirrors the structure of the data. Depending on how we might want to subsequently process the data, we might first copy it to a set of custom data objects, or we might access it by directly querying the maps and lists. In both cases,

The really nice thing about YAML, though, is that many tools exist precisely to help make a custom YAML-based format easy to use and reliable to implement. For the most part, I will focus on tools available in the Python ecosystem. However, many of these tools are equally useful when implementing applications in other languages. YAML-processing libraries exist in other language ecosystems as well.

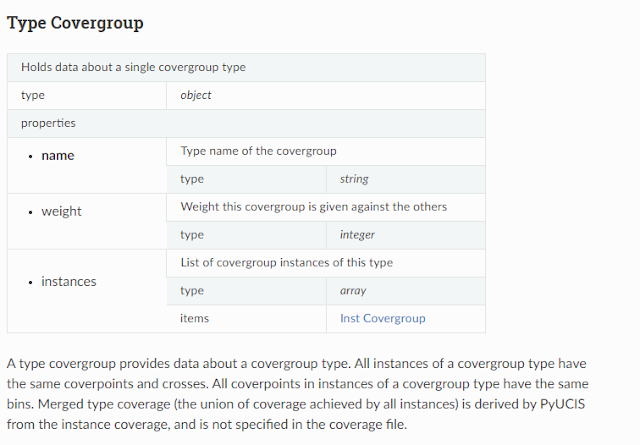

PyUCIS Coverage Example

Let’s look at the following tools in the context of the YAML data format that PyUCIS uses to capture coverage data for testing. Here’s a small example:

The example above is the first part of the schema for our coverage data. It’s a bit verbose, but notice a few things:

- The root of our document is an object (a dictionary with keys and values) with a single root element coverage

- A coverage section is an array of covergroupType. Note that the schema refers to this separate declaration, which allows it to be referenced and reused in multiple locations.

- covergroupType specifies that it is an object that has three possible sub-entries (name, weight, instances)

- Of these possible sub-entries, only ‘name’ is required

This merely scratches the surface of what is possible to describe with json-schema. There’s a bit of a learning curve, but my experience has been that it’s pretty straightforward once you learn a few fundamentals.
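For readers who want to see the shape being described, here is a reconstruction of that schema fragment written as a Python dictionary. It follows the four points listed above; everything else (the draft version, the `CovergroupType` title, and the types chosen for `name`, `weight`, and `instances`) is an assumption and may not match the actual PyUCIS schema.

```python
import json

# Reconstructed from the description above; details marked 'assumed' are guesses.
coverage_schema = {
    "$schema": "http://json-schema.org/draft-07/schema#",  # assumed draft version
    "type": "object",
    "properties": {
        # Single root element: an array of covergroupType entries
        "coverage": {
            "type": "array",
            "items": {"$ref": "#/definitions/covergroupType"},
        }
    },
    "definitions": {
        # Declared separately so that it can be referenced from several places
        "covergroupType": {
            "title": "CovergroupType",              # assumed title
            "type": "object",
            "properties": {
                "name": {"type": "string"},        # assumed type
                "weight": {"type": "integer"},     # assumed type
                "instances": {"type": "array"},    # assumed type
            },
            "required": ["name"],                   # only 'name' is mandatory
        }
    },
}

# Serialize to the JSON text that would be stored in a schema file.
print(json.dumps(coverage_schema, indent=2))
```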

Once we have a schema, we can validate the data-structure returned from the YAML parser against the schema using the jsonschema Python library.

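    import json

    import jsonschema
    import yaml

    # Parse the YAML document into plain Python maps and lists
    with open("coverage.yaml", "r") as fp:
        yaml_data = yaml.load(fp, Loader=yaml.FullLoader)

    # Load the JSON schema that describes the expected structure
    with open("coverage_schema.json", "r") as fp:
        schema = json.load(fp)

    # Raises jsonschema.ValidationError if the data does not match the schema
    jsonschema.validate(instance=yaml_data, schema=schema)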

Validating a document prior to attempting to process the data structure from the YAML parser allows us to simplify our processing code because we can assume that the structure of the data is correct.

Python-JsonSchema-Objects

The simplest way to obtain data is to operate directly on the data structure returned by the parser.

While this is simple and straightforward, there is at least one significant pitfall: it’s almost never a good idea to use string literals. Consider what happens if we change the name of one of our optional keywords just a bit.

    # Default used when the optional 'weight' keyword is absent
    weight = 1
    if "weight" in cg:
        weight = cg["weight"]

If we neglect to update all the locations in our code that use this string literal, some of our data will simply be silently ignored. Clearly, there are some incremental steps we can take – for example, defining a constant for each string literal, making it easier to update.
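A minimal sketch of the "constant per string literal" step might look like the following. The constant names and the `covergroup_weight` helper are hypothetical; the point is only that a keyword rename touches one line instead of every lookup.

```python
from typing import Any, Dict

# One definition per keyword; renaming a keyword in the format changes only these lines.
KEY_NAME = "name"
KEY_WEIGHT = "weight"
DEFAULT_WEIGHT = 1


def covergroup_weight(cg: Dict[str, Any]) -> int:
    """Return the covergroup weight, falling back to the default when absent."""
    return cg.get(KEY_WEIGHT, DEFAULT_WEIGHT)


print(covergroup_weight({KEY_NAME: "cg1"}))                 # weight omitted
print(covergroup_weight({KEY_NAME: "cg2", KEY_WEIGHT: 4}))  # weight given
```

This helps with maintenance, but a misspelled key in the input data is still silently ignored, which is where schema validation earns its keep.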

Another approach is to work with classes that are generated from our schema. This approach makes it much more likely that we’ll find data misuse issues earlier, and has the added benefit of giving us actual classes to work with. I recently discovered the python-jsonschema-objects project, and used it on PyUCIS for the first time. Thus far, I’m extremely impressed and plan to use it more broadly.

The short version of how it works is as follows. python-jsonschema-objects works off of a JSON-schema document. Each section of the schema (e.g. covergroupType) should be given a title from which the class name will be derived. Call python-jsonschema-objects to build a Python namespace containing class declarations. Your code can then create instances of those classes and populate them – either directly or from parsed data.

It looks like this:

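    import json

    import python_jsonschema_objects as pjs

    # 'schema' and 'yaml_data' come from the validation example above
    builder = pjs.ObjectBuilder(schema)
    ns = builder.build_classes()

    # from_json is a classmethod, so it can be called on the class directly
    cov = ns.CoverageData.from_json(json.dumps(yaml_data))
    if cov.covergroups is not None:
        for cg in cov.covergroups:
            print("cg: %s" % cg.name)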

The ‘ns’ object above contains the classes derived from the definitions in the schema. We can create an instance of a CoverageData class that contains our schema-compliant data just by loading the JSON representation of that YAML data. From there on, we can directly access our data as class fields.

VSCode YAML Editor

Thus far, we’ve primarily looked at tools that help the developer. The final two tools are focused on improving the user experience. Both leverage our document schema.

Visual Studio Code (VSCode) is a free integrated development environment (IDE) produced by Microsoft. In open source terms, it’s free as in beer. My understanding is that there are compatible truly open source versions as well. As with many IDEs, there is an extensive ecosystem of plug-ins available to assist in developing different types of code. One of those plug-ins supports YAML development.

So, what does having a schema allow an intelligent editor to do for us? Well, for one thing, it can check the validity of a YAML file as we type it and allow us to fix errors as we go.

It can suggest what content is valid based on where we are in the document. For example, the schema states that we can have coverpoints and crosses elements inside an instances section. The editor knows this, and prompts us with what it knows is valid.


Sunday, June 12, 2022

PyVSC: Working with Coverage Data

I’ve been investing some time in documentation updates this weekend, after a couple of PyVSC users pointed out some under-described aspects of the PyVSC coverage flow. Given that these areas were under-documented in the past, it seemed a good opportunity to highlight what can be done with functional coverage data once it is sampled by a PyVSC covergroup.

So, we’ve described some functional coverage goals using a PyVSC covergroup and coverpoints, created a covergroup instance, and sampled some coverage data – perhaps it was randomly-generated stimulus or data sampled from a monitor. What now?

Runtime Coverage API

One simple thing we can do is to query coverage achieved using the coverage APIs implemented by PyVSC covergroup classes. The `get_coverage` method returns the coverage achieved by all instances of a covergroup type. The `get_inst_coverage` method returns the coverage achieved by the specified covergroup instance.

Let’s look at an example:

In the example above, we define a covergroup with a coverpoint that contains four bins (1, 2, 4, 8). We create two instances of this covergroup and sample them with two different values. After each call to sample, we display the coverage achieved by all instances of the covergroup (type coverage) and the coverage achieved by each instance.

The output from this example is shown above. After sampling the first covergroup, the coverage achieved for that, and all, instances is 25% since one of four bins was hit. After sampling the second covergroup, the coverage achieved for that covergroup instance is also 25%. Because two different bins are hit between the two covergroup instances, two of four bins are hit (50%) for type coverage.
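The difference between type coverage and instance coverage can be modeled in a few lines of plain Python. This is a toy model, not PyVSC code: the `CovergroupModel` class is made up, and it only borrows the method names `sample`, `get_coverage`, and `get_inst_coverage` to mirror the description above.

```python
from typing import List, Set

BINS = (1, 2, 4, 8)  # the four coverpoint bins described above


class CovergroupModel:
    """Toy model: tracks bins hit per instance and across all instances of the type."""

    _instances: List["CovergroupModel"] = []

    def __init__(self) -> None:
        self.hit: Set[int] = set()
        CovergroupModel._instances.append(self)

    def sample(self, value: int) -> None:
        """Record a hit if the value falls in one of the bins."""
        if value in BINS:
            self.hit.add(value)

    def get_inst_coverage(self) -> float:
        """Coverage achieved by this instance alone."""
        return 100.0 * len(self.hit) / len(BINS)

    @classmethod
    def get_coverage(cls) -> float:
        """Type coverage: a bin counts if any instance has hit it."""
        hit_by_any = set().union(*(inst.hit for inst in cls._instances))
        return 100.0 * len(hit_by_any) / len(BINS)


cg1 = CovergroupModel()
cg2 = CovergroupModel()

cg1.sample(1)
print("type=%.0f%% cg1=%.0f%% cg2=%.0f%%" % (
    cg1.get_coverage(), cg1.get_inst_coverage(), cg2.get_inst_coverage()))

cg2.sample(2)
print("type=%.0f%% cg1=%.0f%% cg2=%.0f%%" % (
    cg1.get_coverage(), cg1.get_inst_coverage(), cg2.get_inst_coverage()))
```

After the second sample each instance has hit one of four bins (25%), while the type has seen two distinct bins (50%), which matches the numbers discussed above.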

Runtime Coverage Reports

Another way to look at collected coverage is via a coverage report. PyVSC provides two methods that are nearly identical for obtaining a textual coverage report:

- get_coverage_report – Returns the report as a string

- report_coverage – Writes the report to a stream (stdout by default)

Both of these methods accept a keyword parameter named ‘details’ which controls whether bin hits are reported or just the top-level coverage achieved. Let’s look at a derivative of the first example to better understand the textual coverage report options.

This example is nearly identical to the first one, but with calls to ‘report_coverage’ instead of calls to the covergroup get_coverage methods.

The output from running this example is shown above. When reporting ‘details’ is enabled, the content of each coverage bin is reported. When reporting ‘details’ is disabled, only the top-level coverage achieved is reported. Displaying a coverage report with details is often helpful for confirming the correctness of a coverage model during development.

Saving Coverage Data

The PyUCIS library implements a Python interface to coverage data via the Accellera UCIS data model. It implements an object-oriented interface to coverage data, in addition to the Python equivalent of the UCIS C API. PyVSC uses the PyUCIS library to save coverage data, and can do so in a couple of interesting ways. Coverage data is written via the vsc.write_coverage_db method.

PyVSC can save coverage data to the XML interchange format defined by the UCIS standard. This is the default operating mode for write_coverage_db. The example below shows saving it to a file named ‘cov.xml’.

PyVSC can also save coverage data to a custom database format, provided the tool that implements that database implements the UCIS C API. The example below saves coverage data to a custom database using the UCIS C API implemented in the shared library named ‘libucis.so’.

Both of these paths to saving coverage may provide ways to bring coverage data collected by PyVSC into coverage-analysis flows implemented by commercial EDA tools. Check your tool’s documentation and/or check with your application engineer to understand which options may be available. Feel free to report what works for you on the PyVSC discussion forum so that others can benefit as well.

Viewing Coverage Data

Obviously, you can use commercial EDA tools to view coverage data from PyVSC if your tool provides a path to bring UCIS XML in, or if it implements the UCIS C API. PyUCIS Viewer provides a very simple open-source graphical application for viewing coverage in UCIS XML format.

To use PyUCIS Viewer, save coverage data in UCIS XML interchange format, then run PyUCIS Viewer on that XML file:

% pyucis-viewer cov.xml

A simple tree-based graphical viewer will open to show type and instance coverage.

Conclusion

There are several options for viewing and manipulating coverage once it has been collected via a covergroup modeled with PyVSC. In a future post, we’ll look at some additional manipulation and reporting options being implemented within PyUCIS.

Until then, check out the latest additions to the PyVSC documentation and raise questions and issues on the PyVSC GitHub page.


Sunday, March 27, 2022

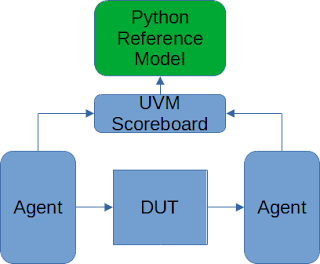

TbLink-RPC: Simplifying the Multi-Language Testbench

SystemVerilog/UVM is, by far, the most widely-used language and methodology for block and subsystem-level verification environments today. The simplicity of that statement overlooks the fact that it’s very common to have other bits of non-SystemVerilog code connected. Maybe it’s some C/C++ code that implements a reference algorithm used by the scoreboard. Maybe it’s an instruction-set simulation model used to implement software behavior. Maybe it’s infrastructure that allows early firmware code to drive behavior in the simulation. Either way, integrating non-SystemVerilog code is a non-trivial development and maintenance task, despite the fact that SystemVerilog defines a standard API (the Direct Programming Interface – DPI).

This is the first in a series of posts describing a project that I’ve been working on with the goal of simplifying this situation. TbLink-RPC (roughly, Testbench Link Remote Procedure Call) provides infrastructure that dramatically reduces the code a testbench developer needs to create in order to integrate code with a simulation environment.

Two primary experiences brought me to working on the TbLink-RPC project. The first was a somewhat long history of feeling like I had to reinvent the wheel every time I needed to integrate non-SystemVerilog code into a testbench environment. The second was my interest in ‘alternative’ testbench languages and my experience using Python as a verification language.

Back in the 2017/2018 timeframe, I started to get re-involved with open-source hardware design targeting, primarily, FPGAs. When you’re designing gateware (frankly, any software-like thing), it’s imperative to have a good test environment. I did a bit of exploration, trying out bespoke C++ testbench environments and a few other things, before landing on Python and cocotb as my test framework. A lot of this was motivated by open-source tool capabilities and community. I was committed to using open-source tools as much as possible for my open-source gateware, and open-source simulators (eg Icarus and Verilator) didn’t support sufficient SystemVerilog features to be able to use SV-UVM. After looking around a bit, cocotb seemed to have the largest community around it making it the obvious choice.

I found a lot to like about Python and cocotb for developing testbench environments. Python has a large collection of libraries, and the ability to easily incorporate these in a testbench boosted my productivity. I find the Python language easy to write and use – especially for smaller projects. Having a pure-Python testbench worked for me as a hobbyist. In many ways, that is because I create all my testbench environments from the ground up and don’t use commercial Verification IP (VIP/UVCs).

This same approach doesn’t work in most commercial environments. Testbench environments must reuse existing VIPs/UVCs (either commercial or developed in-house), and it’s common for a testbench architecture to remain mostly-unchanged across multiple design cycles. Doing a wholesale conversion to Python (or any other ‘alternative’ language) doesn’t make sense. Furthermore, bringing in small amounts of a different language has a high development and maintenance cost.

What I concluded I really wanted was a framework that would simplify the process of integrating some amount of testbench code written in any language (more precisely, any non-SystemVerilog language since the simulator already supports SystemVerilog) into a simulation environment.

Concept

I’ll get into more detail about the TbLink-RPC architecture in a future post. Fundamentally, though, the idea is to form a point-to-point connection between two environments through which pairs of objects can communicate via method calls.

There are a few things that make TbLink-RPC different from other remote-procedure-call solutions. The first is that TbLink-RPC is simulation-centric. Or, rather, it is designed to work with environments that maintain a local “simulated” notion of time. To that end, TbLink-RPC supports both blocking (time-consuming) and non-blocking functions, and defines a protocol to ensure that simulation time advances only when intended.

TbLink-RPC is designed to support both single-process and multi-process integration. Single-process integration (where both environments run in the same OS process) provides higher performance. But single-process integration isn’t always feasible. For example, one environment might be an instruction-set simulator (e.g. QEMU) that must run in its own process in order to manage memory in its own highly-specialized way.

TbLink-RPC emphasizes automation and modularity. Code-generation automation is used to create the boilerplate code, minimizing user effort to integrate new APIs. The entire system is designed such that integrations created independently can be easily combined. Even better, this is done in such a way that SystemVerilog users don’t need to deal with generated DPI integration code.

Example

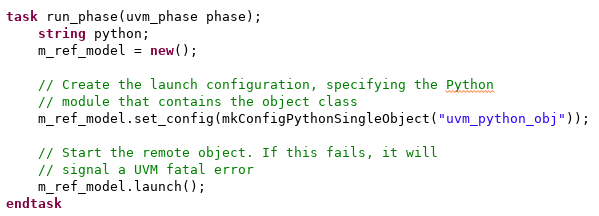

An example is often the simplest way to get across a concept, and I have a very simple one here. For now, I’ll just show the key elements of a typical use case: connecting a Python reference model to a UVM testbench environment.

Now, in this case our DUT isn’t that exciting. It’s just an adder, so our reference model is correspondingly trivial.

Our reference-model class contains a method named ‘add’ that returns the sum of two parameters passed to it. Note that we apply a decorator (tblink_rpc.iftype) to the class. This registers the class with the TbLink-RPC infrastructure. Note, also, that we apply a decorator to the ‘add’ method and specify typing annotations for the parameters and return type. Together, these register the method with TbLink-RPC and specify the parameter types to be used.

In order to call our Python class from SystemVerilog, we will need a SV class to call. That class is shown above, and combines a class from the TbLink-RPC library (TbLinkLaunchUvmObj) with some generated implementation classes created from the API definition in Python. If our class contained methods implemented in SystemVerilog that could be called from Python, then this class would contain the implementation. Since that’s not the case, there isn’t anything to implement here.

From a UVM testbench perspective, using our Python class involves two steps:

- Launch an instance of the class in Python connected with the SV class

- Call the API

TbLink-RPC is designed to simplify integrating code into a simulation environment. In the context of a SystemVerilog environment, this makes it much simpler to bring in external models written in languages other than SystemVerilog. Over the course of the next few posts, I’ll go into a bit more detail on TbLink-RPC architecture and the nuts and bolts of the integration process.

References

- TbLink-RPC (under construction) - https://tblink-rpc.github.io/

- cocotb - https://docs.cocotb.org/en/stable/


Sunday, January 23, 2022

Documenting SystemVerilog with Sphinx

As you can imagine, SystemVerilog support is critical for a project that connects into simulation environments. SystemVerilog and UVM currently are, after all, the most widely-deployed solution for block- and subsystem-level verification. But support for other languages (C, C++, Python, etc.) is important as well. Consequently, when it comes to documenting APIs, I need to cover a fair amount of ground.

Sphinx for Documenting Code

I've been using Sphinx Documentation Generator for the last couple of years. Sphinx accepts input formatted with reStructuredText mark-up and generates formatted output in HTML, PDF, and several other formats. Sphinx has a wide variety of plug-ins that help to make formatting different types of document content more productive. Sphinx was originally created for documenting Python code, and it shows. That said, its features support documenting far more than just Python code -- as we'll shortly see.

Leveraging Code Comments

Tools that generate documentation from code comments have been around for a long time. My experience has been that they're a good way to quickly create somewhat-generic documentation, provided the doc-generation tool supports the right coding language and the codebase contains enough comments. My experience has also been that documentation created from API code comments is lacking key insights from the author on the code's architecture.

One thing that I've liked about Sphinx is that it supports referencing code-comment documentation, but relies on the document author to do so. This approach encourages the documentation author to incorporate API documentation alongside explanatory text (that could be awkward to include in a code comment) instead of having large fully auto-generated API-reference sections. This approach also gives the documentation author much more control over the document structure than fully-automated API-documentation tools can typically afford to provide.

What About Non-Python Code?

As mentioned previously, Sphinx was originally a tool for documenting Python packages. As such, it provides built-in features for extracting documentation comments from Python code. The Doxygen tool is one very popular way of documenting languages such as C and C++ from code comments. The Breathe plug-in for Sphinx processes the XML output files from Doxygen, allowing Sphinx documents to bring in documentation code-comments from any language supported by Doxygen.

The good news is that Doxygen supports a wide range of languages. Using Doxygen to pull in doc comments from the C++ codebase of my project will work with relative ease. Unfortunately, though, Doxygen doesn't support SystemVerilog.

Doxygen and Filters

Believe it or not, a commonly-recommended path to support a new language with Doxygen is to write a filter script that transforms the source language (that Doxygen doesn't support) into the form of a language that Doxygen does.

As luck would have it, there is a SystemVerilog filter for Doxygen: DoxygenFilterSystemVerilog. It's written in Perl and uses regular expressions to recognize SystemVerilog constructs and translate them into C++ equivalents that Doxygen can understand. Doxygen primarily cares about constructs like classes, class fields, and function declarations. This makes an approximate-translation strategy workable.
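To show what an approximate, regular-expression-based translation looks like, here is a toy filter written in Python. It is not the DoxygenFilterSystemVerilog implementation (which is a separate script); the rules and the `sv_to_cpp_like` function are made up for this sketch and handle only class declarations.

```python
import re

# Each rule maps one SystemVerilog construct onto a rough C++ equivalent.
_RULES = [
    # class foo extends bar;  ->  class foo : public bar {
    (re.compile(r"^(\s*)class\s+(\w+)\s+extends\s+(\w+)\s*;"), r"\1class \2 : public \3 {"),
    # class foo;              ->  class foo {
    (re.compile(r"^(\s*)class\s+(\w+)\s*;"), r"\1class \2 {"),
    # endclass [: label]      ->  };
    (re.compile(r"^(\s*)endclass\b.*$"), r"\1};"),
]


def sv_to_cpp_like(source: str) -> str:
    """Rewrite class declarations line by line; all other lines pass through unchanged."""
    out = []
    for line in source.splitlines():
        for pattern, replacement in _RULES:
            new_line, count = pattern.subn(replacement, line)
            if count:
                line = new_line
                break  # at most one rule applies per line
        out.append(line)
    return "\n".join(out)


sv_source = """\
// Base transaction
class my_item extends uvm_object;
endclass
"""
print(sv_to_cpp_like(sv_source))
```

Comments pass through untouched, which is the important part: Doxygen only needs the declarations to look familiar enough to attach the documentation comments to them.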

Dealing with Packages and Namespaces

There's just one small issue that we need to solve in order to have a complete flow. SystemVerilog class-based code relies heavily on the pre-processor to assemble a set of classes under the appropriate package. In C++, a namespace is just a 'tag' that gets applied to content. Any number of files may declare content inside the same namespace with no issues. SystemVerilog, conversely, requires that a given package be declared only once and that all content in that package be declared inside that single package scope. The end result is that users sensibly place different classes in different files and 'glue' the whole thing together using include directives and the pre-processor.

If we are only documenting code from a single package, this likely wouldn't cause a problem. We could simply run the Doxygen filter on each class file. When documenting a codebase with multiple packages, we need to ensure we stay consistent with the packages in which classes are declared. How do we do this? By pre-processing the code, of course!

Putting it all Together

There really are two parts to our flow. The first is processing the SystemVerilog code to make it 'look' like C++.

That part of the flow is shown above. In this portion of the flow I'm using Verilator for the pre-processor because of a unique feature. Most pre-processors that I'm aware of strip out comments as part of preprocessing. Verilator allows the user to (optionally) retain comments. Since the vast majority of the 'interesting' content is found in documentation comments, this is a critical feature for this flow.

Once we have our "c++-ified" SystemVerilog, we can run that through Doxygen. This will result in some XML files containing the information and relationships Doxygen extracted from the code. These XML files are what the Breathe plug-in reads and exposes to Sphinx.

As I mentioned earlier, Sphinx brings in documentation code-comment content on demand instead of automatically assembling it into a document or document section.

Looking Forward

In my current work, I'm focused on SystemVerilog classes. The approach above works well for documenting class structures, but doesn't work so well for documenting SystemVerilog modules and interfaces. My current thinking is to use the Sphinx Verilog Domain plug-in to document modules, and continue to use the flow above for classes. Would a single tool be better? Yes. But having two complementary tools is just fine.

Now that I have a way to document SystemVerilog class code, I'm digging into that process. If you're curious about the commands/scripts I'm using, you can have a look at the tblink-rpc-docs project and the Makefile in that project.

Hope you find this helpful, and feel free to comment back on your approach to documenting SystemVerilog classes.