This blog post is a bit of a departure from many that I’ve created for this blog. Most of my blog posts are about things I’ve created. This post is about a collection of tools that I use in developing the things I create.

I’ve recently come back to working on some new features in PyUCIS, the Python library for accessing functional coverage data. PyUCIS provides an implementation of the Accellera UCIS, and several back-end implementations. Good tests are critical when developing new functionality and, in the case of PyUCIS, tests rely on having coverage data to manipulate. As it so happens, while the UCIS API is good for providing tools access to coverage data, it’s not a great interface for humans (and, specifically, for test writers). What test writers need is a very concise and easy-to-read mechanism to capture the coverage data on which the library should operate. How should we capture this data? A couple decades ago, I might have toyed with developing a small language grammar to capture exactly the data I needed. Today, using a mark-up language like YAML or JSON to capture such data is my go-to approach.

YAML - A Data Format for Everything and Nothing

There are many reasons why YAML is popular for capturing application-configuration information and structured data such as the coverage data we need here. YAML’s structure of a nested series of mappings and lists lends itself to easily capturing all manner of data. Furthermore, support for reading and writing YAML is available in the vast majority of programming languages.

However, the ease with which we can define new data formats, and create simple processors to accept data captured in these formats, can be deceptive. It’s tempting to think that, because YAML defines a standard set of structures for capturing data, users will find it easy and intuitive to capture data in our specific format. It’s tempting to think that our format is so simple that a little documentation with a few examples will be more than sufficient. The truth, however, is that making our application-specific data format usable requires us to do many of the same things that we would have to do if we defined a custom language. Our YAML-based format must be fully documented, and our data processors must be robust: they must accept valid content, reject invalid content, and never silently ignore unrecognized input. I’ve had the painful experience of coming back to a project (yep, one that I created) after a few months away and having to dig into the YAML-processing code to remember the data format.

The apparent ease with which we can access data from our application code is also a bit deceptive. Most YAML-reading libraries provide access to the data through a hierarchy of maps and lists that mirrors the structure of the data. Depending on how we want to subsequently process the data, we might first copy it to a set of custom data objects, or we might access it by directly querying the maps and lists. In both cases,

The really nice thing about YAML, though, is that many tools exist precisely to help make a custom YAML-based format easy to use and reliable to implement. For the most part, I will focus on tools available in the Python ecosystem. However, many of these tools are equally useful when implementing applications in other languages, and YAML-processing libraries exist in other language ecosystems as well.

PyUCIS Coverage Example

Let’s look at the following tools in the context of the YAML data format that PyUCIS uses to capture coverage data for testing. Here’s a small example:

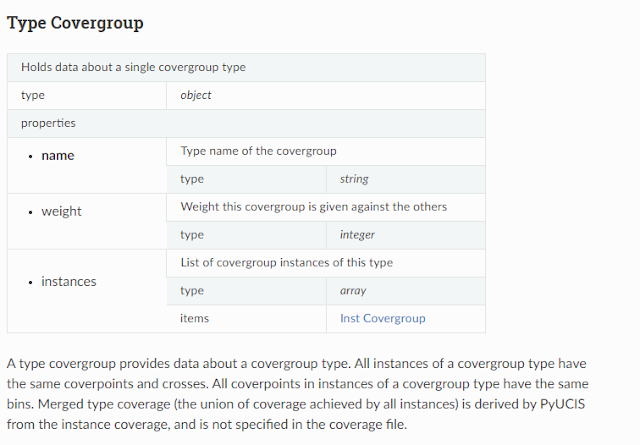

The example above is the first part of the schema for our coverage data. It’s a bit verbose, but notice a few things:

- The root of our document is an object (a dictionary with keys and values) with a single root element coverage

- A coverage section is an array of covergroupType. Note that the schema refers to this separate declaration, which allows it to be referenced and reused in multiple locations.

- covergroupType specifies that it is an object that has three possible sub-entries (name, weight, instances)

- Of these possible sub-entries, only ‘name’ is required
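
As a rough illustration of the structure described in the list above, here is a sketch of such a schema written as a Python dictionary. The `definitions`/`$ref` layout, the draft version, and the property types (string, integer, array) are my assumptions for the sake of the example; the actual PyUCIS schema may differ in its details.

```python
import json

# Hypothetical sketch of the schema fragment described above.
# The draft version and the property types are assumptions.
coverage_schema = {
    "$schema": "http://json-schema.org/draft-07/schema#",
    "type": "object",
    "properties": {
        # The single root element: an array of covergroupType entries
        "coverage": {
            "type": "array",
            "items": {"$ref": "#/definitions/covergroupType"},
        },
    },
    "definitions": {
        # Declared separately so it can be referenced from several places
        "covergroupType": {
            "title": "covergroupType",
            "type": "object",
            "properties": {
                "name": {"type": "string"},
                "weight": {"type": "integer"},
                "instances": {"type": "array"},
            },
            # Only 'name' is mandatory; the other entries are optional
            "required": ["name"],
        },
    },
}

# Serialize to JSON text, the form in which a schema is usually stored
schema_text = json.dumps(coverage_schema, indent=2)
print(schema_text)
```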

This merely scratches the surface of what is possible to describe with json-schema. There’s a bit of a learning curve, but my experience has been that it’s pretty straightforward once you learn a few fundamentals.

Once we have a schema, we can validate the data-structure returned from the YAML parser against the schema using the jsonschema Python library.

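    import json

    import jsonschema
    import yaml

    # Parse the YAML document into plain Python dicts and lists
    with open("coverage.yaml", "r") as fp:
        yaml_data = yaml.load(fp, Loader=yaml.FullLoader)

    # The schema itself is stored as a JSON document
    with open("coverage_schema.json", "r") as fp:
        schema = json.load(fp)

    # Raises jsonschema.ValidationError if the data does not match the schema
    jsonschema.validate(instance=yaml_data, schema=schema)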

Validating a document prior to attempting to process the data structure from the YAML parser allows us to simplify our processing code because we can assume that the structure of the data is correct.
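
Validation is most useful when failures are reported in a way that users can act on. Here is a minimal sketch of one way to do that, collecting every violation instead of stopping at the first. The inline schema, the sample data, and the `check` helper are hypothetical stand-ins for illustration, not part of PyUCIS.

```python
import jsonschema

# Tiny stand-in schema and data (assumptions for illustration only)
schema = {
    "type": "object",
    "properties": {
        "coverage": {
            "type": "array",
            "items": {
                "type": "object",
                "properties": {
                    "name": {"type": "string"},
                    "weight": {"type": "integer"},
                },
                "required": ["name"],
            },
        },
    },
}
data = {"coverage": [{"weight": 1}]}  # the required 'name' is missing


def check(instance, schema):
    """Return a list of readable messages, one per schema violation."""
    validator = jsonschema.Draft7Validator(schema)
    messages = []
    for err in validator.iter_errors(instance):
        # absolute_path locates the offending element within the document
        location = "/".join(str(p) for p in err.absolute_path) or "<root>"
        messages.append("%s: %s" % (location, err.message))
    return messages


errors = check(data, schema)
for msg in errors:
    print(msg)
```

Reporting the path alongside the message tells the user which covergroup entry is at fault, which matters once a file holds more than a handful of entries.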

Python-JsonSchema-Objects

The simplest way to obtain data is to operate directly on the data structure returned by the parser.
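
A minimal sketch of what that direct access might look like is below. The literal dictionary stands in for the structure a YAML parser would return; the covergroup names are hypothetical, and the default weight of 1 is assumed.

```python
# Stand-in for the structure a YAML parser would return (hypothetical data)
yaml_data = {
    "coverage": [
        {"name": "cg1", "weight": 2},
        {"name": "cg2"},  # the optional 'weight' entry is omitted
    ]
}

summary = []
for cg in yaml_data["coverage"]:
    # 'name' is required by the schema, so it can be indexed directly
    name = cg["name"]
    # 'weight' is optional, so fall back to a default when it is absent
    weight = 1
    if "weight" in cg:
        weight = cg["weight"]
    summary.append((name, weight))
    print("covergroup %s weight=%d" % (name, weight))
```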

While this is simple and straightforward, there is at least one significant pitfall: it’s almost never a good idea to use string literals. Consider what happens if we change the name of one of our optional keywords just a bit.

    # Use a default weight unless the covergroup specifies one
    weight = 1
    if "weight" in cg.keys():
        weight = cg["weight"]

If we neglect to update all the locations in our code that use this string literal, some of our data will simply be silently ignored. Clearly, there are some incremental steps we can take – for example, defining a constant for each string literal, making it easier to update.
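
The constant-per-keyword idea can be sketched roughly as follows. The constant names and the `covergroup_weight` helper are hypothetical, chosen only for illustration.

```python
# Hypothetical key constants; the names are illustrative, not taken from PyUCIS
KEY_NAME = "name"
KEY_WEIGHT = "weight"


def covergroup_weight(cg, default=1):
    """Return the covergroup's weight, or the default if none was given."""
    return cg.get(KEY_WEIGHT, default)


print(covergroup_weight({KEY_NAME: "cg1", KEY_WEIGHT: 3}))
print(covergroup_weight({KEY_NAME: "cg2"}))
```

With this arrangement, renaming a keyword means changing one line, and a mistyped constant name fails loudly with a NameError instead of being silently ignored.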

Another approach is to work with classes that are generated from our schema. This approach makes it much more likely that we’ll find data misuse issues earlier, and has the added benefit of giving us actual classes to work with. I recently discovered the python-jsonschema-objects project, and used it on PyUCIS for the first time. Thus far, I’m extremely impressed and plan to use it more broadly.

The short version of how it works is as follows. python-jsonschema-objects works off of a JSON-schema document. Each section of the schema (e.g., covergroupType) should be given a title from which the class name will be derived. Call python-jsonschema-objects to build a Python namespace containing class declarations. Your code can then create instances of these classes and populate them, either directly or from parsed data.

It looks like this:

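    import json

    import python_jsonschema_objects as pjs

    # Build a namespace of classes from the JSON-schema document
    builder = pjs.ObjectBuilder(schema)
    ns = builder.build_classes()

    # Create a CoverageData object from the JSON form of the parsed YAML data
    cov = ns.CoverageData.from_json(json.dumps(yaml_data))

    if cov.covergroups is not None:
        for cg in cov.covergroups:
            print("cg: %s" % cg.name)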

The ‘ns’ object above contains the classes derived from the definitions in the schema. We can create an instance of a CoverageData class that contains our schema-compliant data just by loading the JSON representation of that YAML data. From there on, we can directly access our data as class fields.

VSCode YAML Editor

Thus far, we’ve primarily looked at tools that help the developer. The final two tools are focused on improving the user experience. Both leverage our document schema.

Visual Studio Code (VSCode) is a free integrated development environment (IDE) produced by Microsoft. In open source terms, it’s free as in beer. My understanding is that there are compatible truly open source versions as well. As with many IDEs, there is an extensive ecosystem of plug-ins available to assist in developing different types of code. One of those plug-ins supports YAML development.

So, what does having a schema allow an intelligent editor to do for us? Well, for one thing, it can check the validity of a YAML file as we type it and allow us to fix errors as we go.

It can suggest what content is valid based on where we are in the document. For example, the schema states that we can have coverpoints and crosses elements inside an instances section. The editor knows this, and prompts us with what it knows is valid.

The views and opinions expressed above are solely those of the author and do not represent those of my employer or any other party.